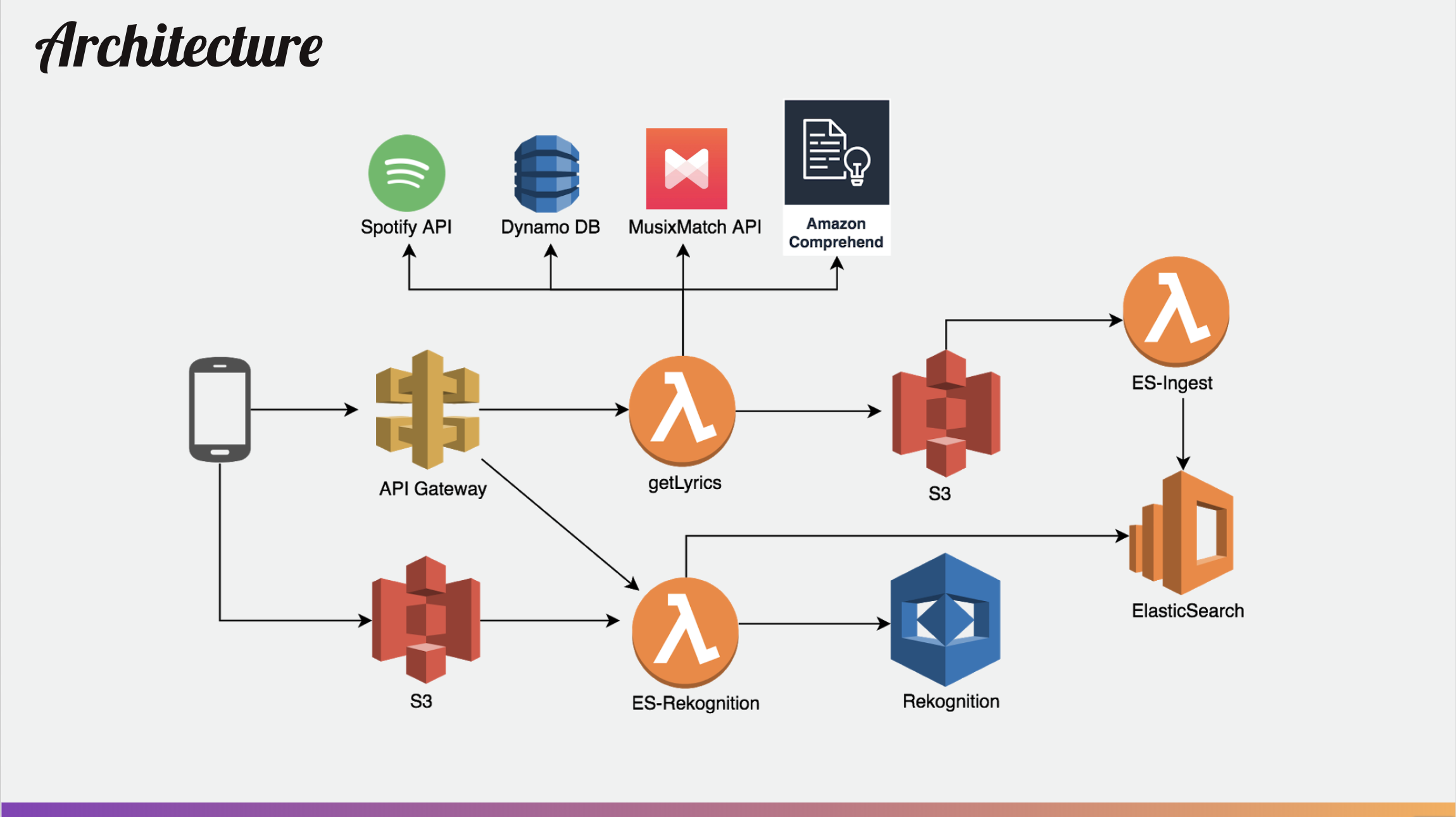

Caption Crunch is a serverless application that uses image recognition and NLP to output a relevant song lyric based on an image uploaded by the user.

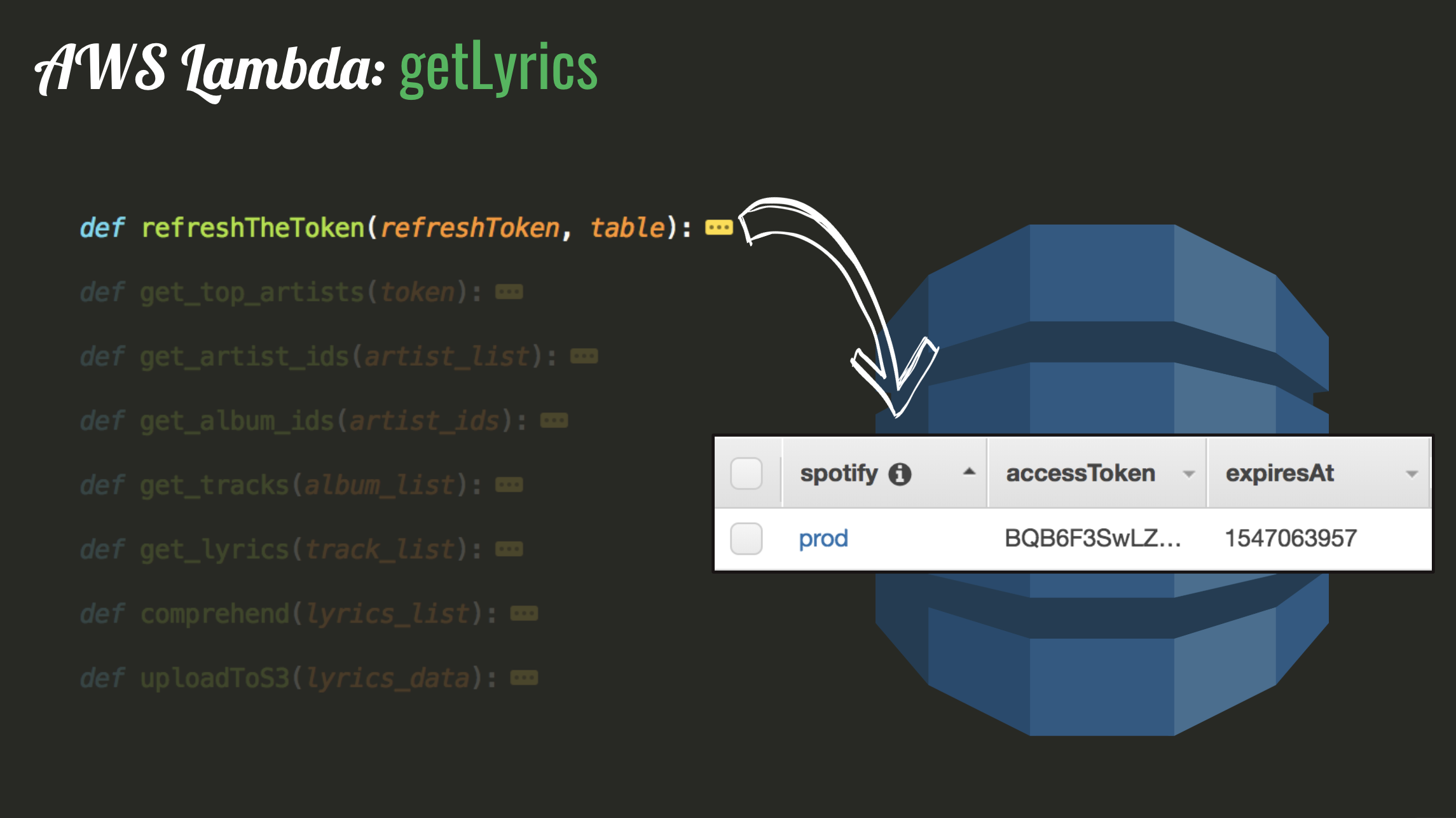

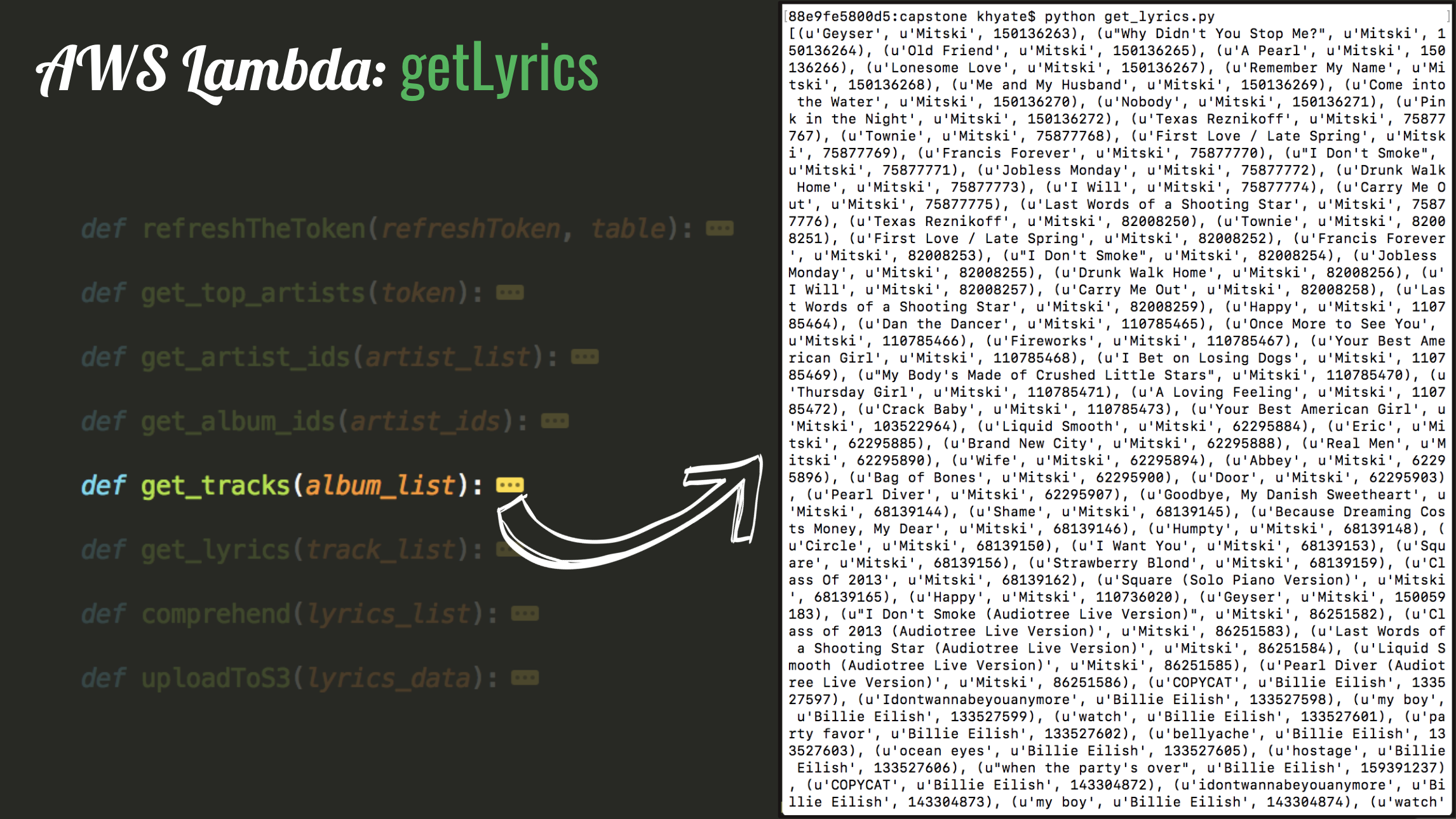

When a user clicks the Sign Up button, API Gateway triggers the getLyrics Lambda function. The first task of getLyrics is to authenticate with the user's Spotify account.
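As a rough illustration of the authentication step, the sketch below builds the Spotify consent URL. It assumes the OAuth authorization code flow and the `user-top-read` scope (the scope Spotify requires for reading top artists); the source does not say which flow the app uses, and `build_authorize_url` and its parameters are hypothetical names.

```python
from urllib.parse import urlencode

# Spotify's OAuth consent endpoint.
SPOTIFY_AUTHORIZE_URL = "https://accounts.spotify.com/authorize"


def build_authorize_url(client_id: str, redirect_uri: str, state: str) -> str:
    """Return the URL the user is sent to in order to grant access.

    Assumption: authorization code flow. After consent, Spotify redirects
    to redirect_uri with a `code` that is exchanged for an access token.
    """
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "scope": "user-top-read",  # needed to read the user's top artists
        "state": state,  # opaque value echoed back, used to guard against CSRF
    }
    return f"{SPOTIFY_AUTHORIZE_URL}?{urlencode(params)}"
```

The token exchange itself is omitted here; it needs the app's client secret, which would normally live in the Lambda configuration rather than in code.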

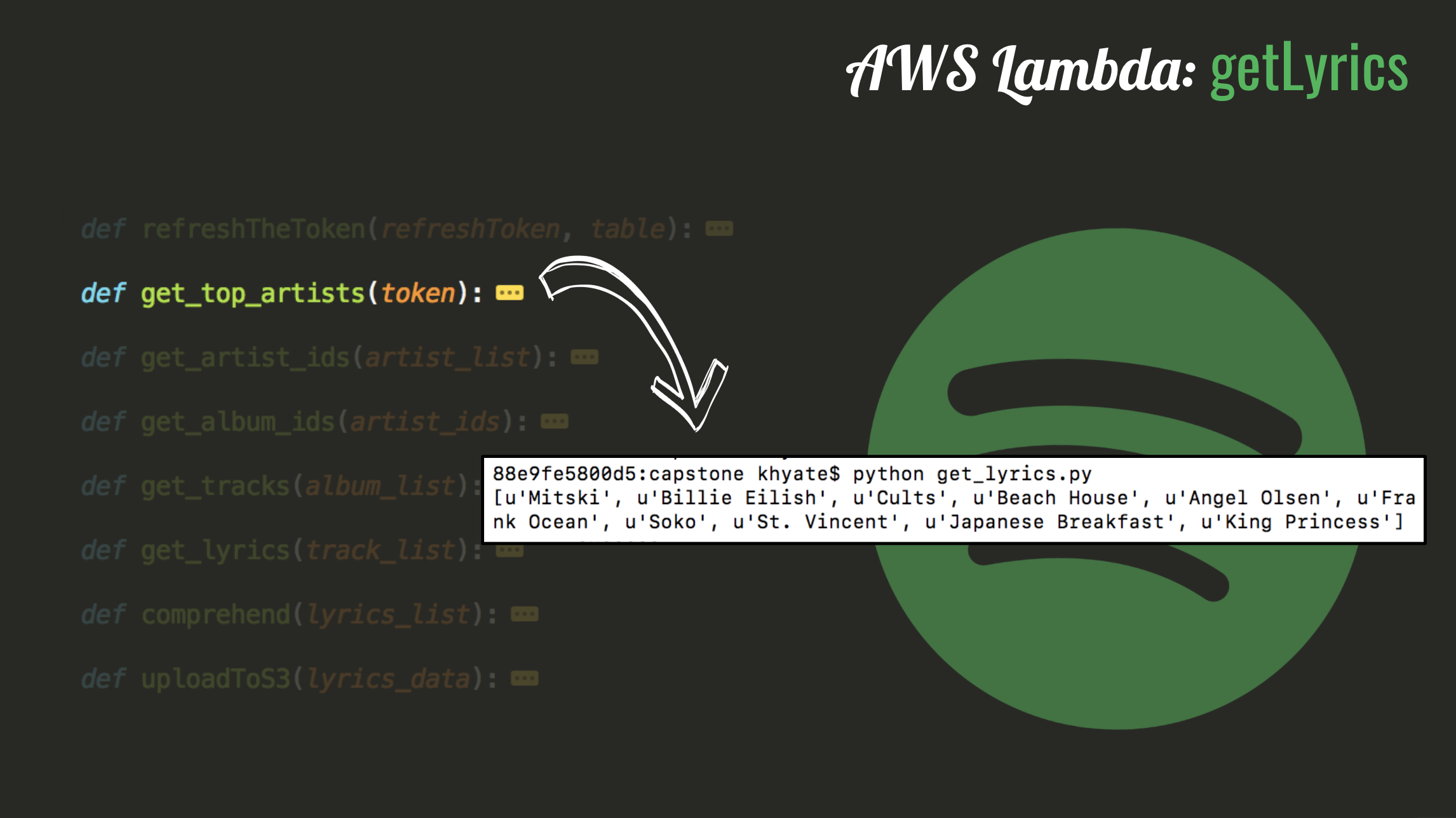

Next, getLyrics retrieves a list of the user's Top 10 Spotify Artists.
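A minimal sketch of this step, assuming the Spotify Web API endpoint `GET /v1/me/top/artists` and an access token already obtained for the user. The function names are hypothetical and the source does not show how getLyrics actually makes the call.

```python
import json
from urllib.request import Request, urlopen

# limit=10 matches the "Top 10" described above.
TOP_ARTISTS_URL = "https://api.spotify.com/v1/me/top/artists?limit=10"


def build_top_artists_request(access_token: str) -> Request:
    """Build the authenticated request for the user's top artists."""
    return Request(
        TOP_ARTISTS_URL,
        headers={"Authorization": f"Bearer {access_token}"},
    )


def parse_artist_names(payload: dict) -> list[str]:
    """Pull the artist names out of the response's `items` array."""
    return [item["name"] for item in payload.get("items", [])]


def get_top_artists(access_token: str) -> list[str]:
    """Call Spotify and return up to ten artist names."""
    with urlopen(build_top_artists_request(access_token)) as response:
        return parse_artist_names(json.load(response))
```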

Each of these artist names is then sent in a request to MusixMatch, a large online database of song lyrics with a developer API.

From MusixMatch, the function iterates over every Album by each of the user's Top 10 Artists and retrieves each Track Name. Finally, each Track Name is passed back to MusixMatch to retrieve its lyrics, producing a large set of song lyrics.
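The artist to album to track to lyrics traversal could look roughly like the sketch below. It assumes the MusixMatch API methods `artist.search`, `artist.albums.get`, `album.tracks.get`, and `track.lyrics.get`, and the response field names as I understand them from MusixMatch's documentation; verify both before relying on them. The `call` parameter is a hypothetical helper that performs the HTTP request (including the API key) and returns the `body` part of the response.

```python
from typing import Callable, Iterator

# Hypothetical transport: (method name, query params) -> response "body" dict.
ApiCall = Callable[[str, dict], dict]


def iter_lyrics(artist_name: str, call: ApiCall) -> Iterator[tuple[str, str]]:
    """Yield (track name, lyrics) for every track on every album of an artist."""
    artists = call("artist.search", {"q_artist": artist_name, "page_size": 1})
    if not artists["artist_list"]:
        return  # artist not found, nothing to yield
    artist_id = artists["artist_list"][0]["artist"]["artist_id"]

    albums = call("artist.albums.get", {"artist_id": artist_id})
    for album_entry in albums["album_list"]:
        album_id = album_entry["album"]["album_id"]
        tracks = call("album.tracks.get", {"album_id": album_id})
        for track_entry in tracks["track_list"]:
            track = track_entry["track"]
            lyrics = call("track.lyrics.get", {"track_id": track["track_id"]})
            yield track["track_name"], lyrics["lyrics"]["lyrics_body"]
```

Because this makes one request per track, a real implementation would likely need paging and rate-limit handling, which the sketch leaves out.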

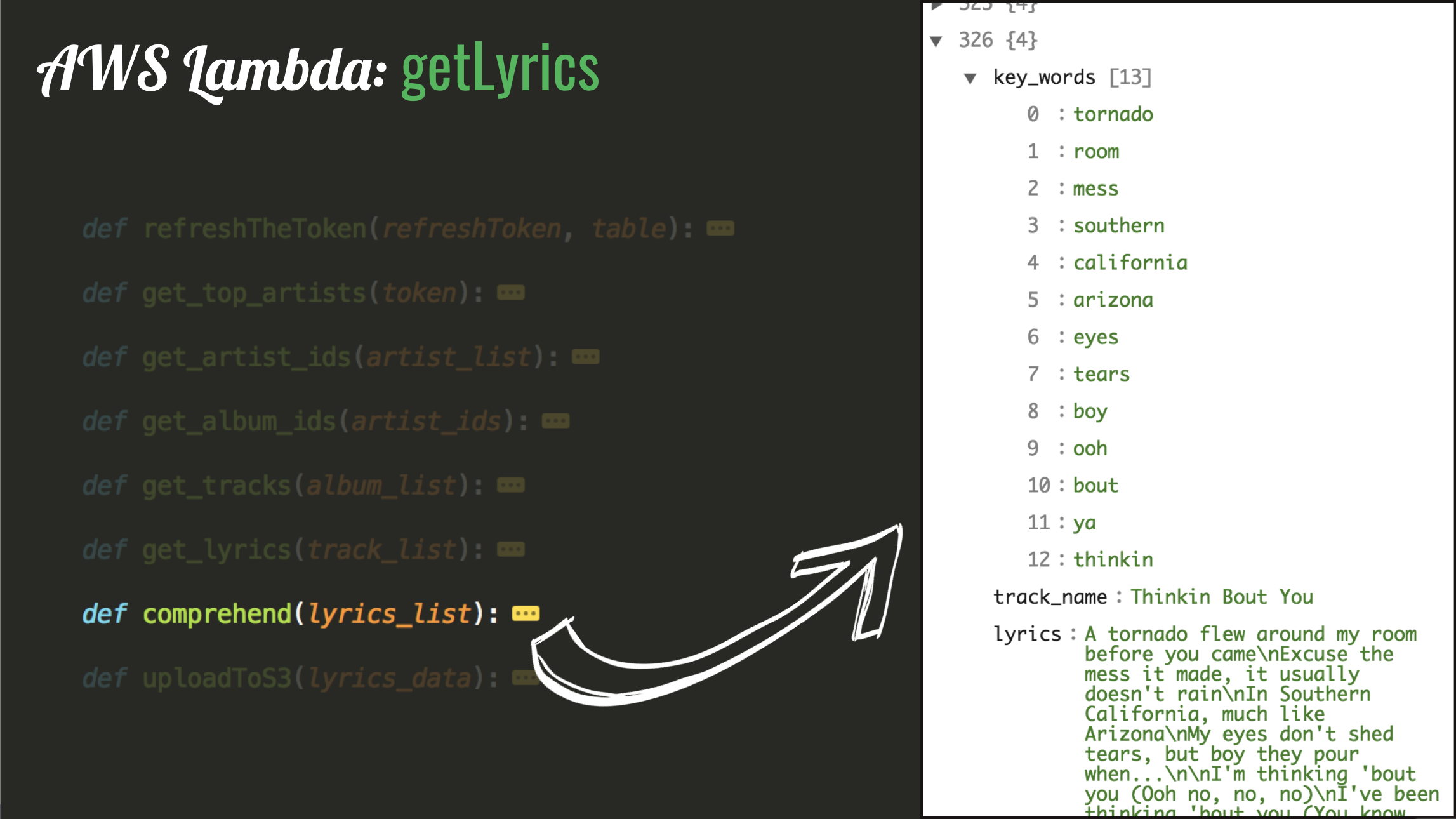

Next, each set of lyrics is sent through Amazon Comprehend, an AWS service for natural language processing. For this use case, Comprehend extracts a set of Key Words from the song lyrics, based on frequency and part of speech.
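One way to get Key Words from Comprehend is its `detect_key_phrases` operation, sketched below. This assumes that operation is the one used (the source does not name it), that the lyrics are in English, and that `comprehend` is a boto3 Comprehend client created by the caller. `extract_key_words` and the `min_score` threshold are hypothetical.

```python
def extract_key_words(comprehend, lyrics: str, min_score: float = 0.9) -> list[str]:
    """Return de-duplicated, lower-cased key phrases found in the lyrics.

    `comprehend` is expected to behave like boto3.client("comprehend").
    Phrases scoring below `min_score` (Comprehend scores range 0 to 1)
    are dropped.
    """
    response = comprehend.detect_key_phrases(Text=lyrics, LanguageCode="en")
    seen: set[str] = set()
    key_words: list[str] = []
    for phrase in response["KeyPhrases"]:
        text = phrase["Text"].lower()
        if phrase["Score"] >= min_score and text not in seen:
            seen.add(text)
            key_words.append(text)
    return key_words


# Usage inside the Lambda function (requires boto3 and AWS credentials):
#   import boto3
#   key_words = extract_key_words(boto3.client("comprehend"), lyrics)
```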

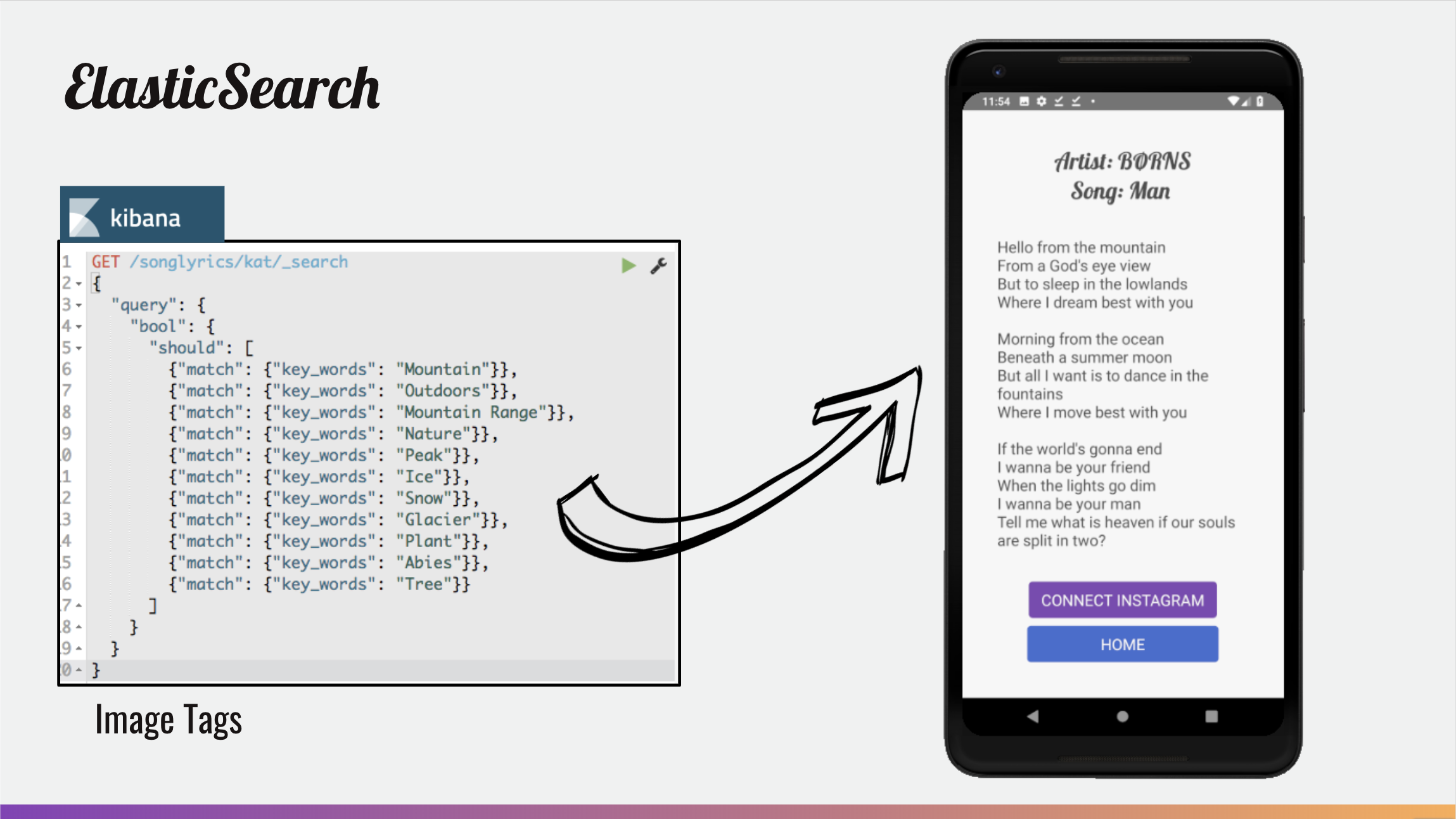

These Key Words are stored alongside the full lyrics in a key:value format within the Amazon Elasticsearch Service.
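To make the storage format concrete, here is a sketch of what one stored document and a `_bulk` request body might look like. The field names (`track_name`, `lyrics`, `key_words`) and the index name are hypothetical, and the action line assumes Elasticsearch 7 or later, where documents no longer need a `_type`.

```python
import json


def to_document(track_name: str, lyrics: str, key_words: list[str]) -> dict:
    """Pair the full lyrics with their Key Words in one JSON document."""
    return {"track_name": track_name, "lyrics": lyrics, "key_words": key_words}


def to_bulk_body(index: str, documents: list[dict]) -> str:
    """Serialize documents as newline-delimited JSON for the _bulk API.

    Each document is preceded by an action line, and the body must end
    with a newline.
    """
    lines = []
    for document in documents:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(document))
    return "".join(line + "\n" for line in lines)
```

The resulting string would be sent with `POST /_bulk` and the `Content-Type: application/x-ndjson` header; request signing for the AWS-hosted domain is not shown.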

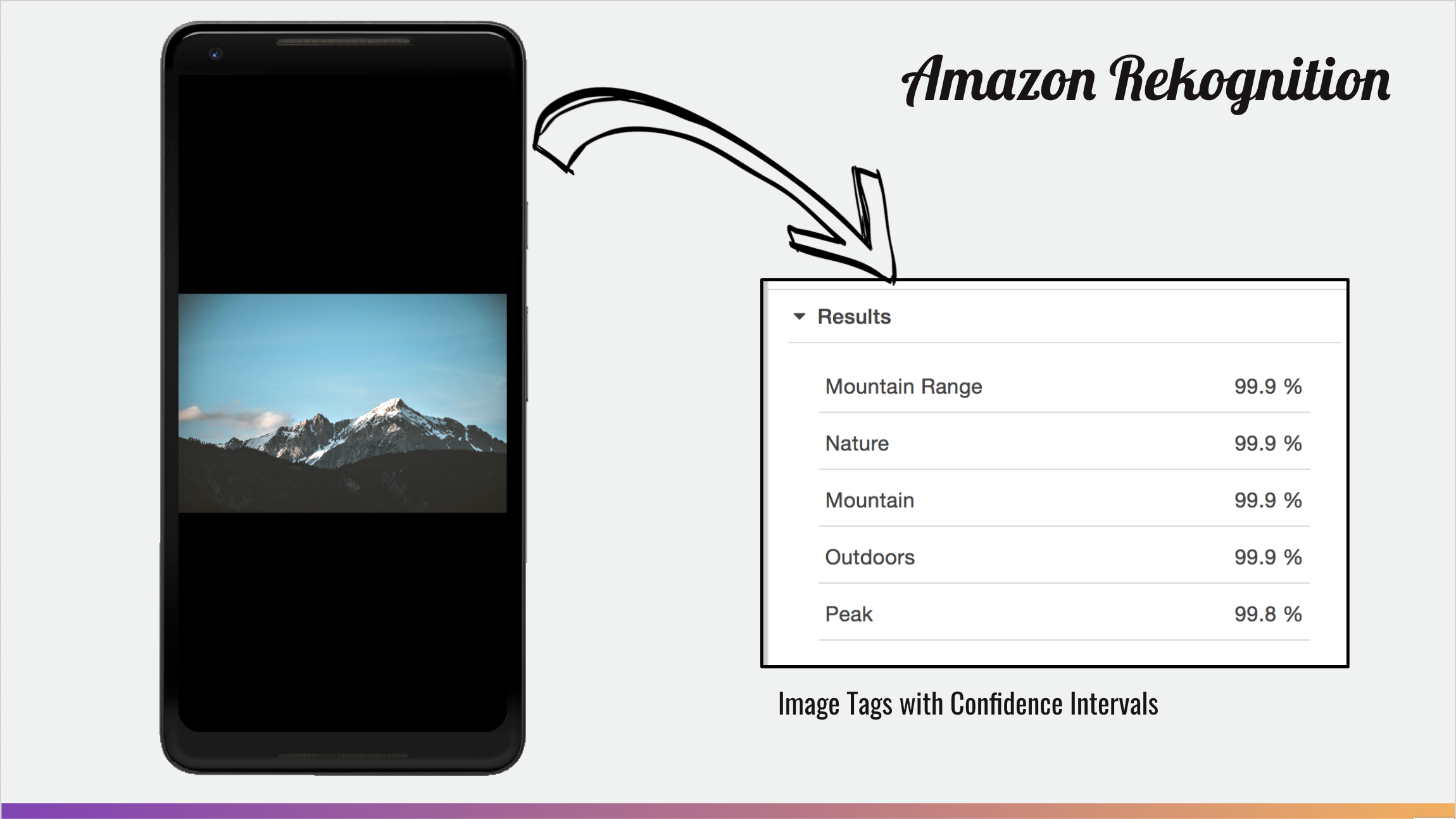

When a user selects Upload on the mobile application, they can take or upload a picture, which is stored in an S3 bucket.

This action also sends the picture to Amazon Rekognition, which returns a list of Image Tags (objects, people, text, and scenery detected in the image, each with a confidence score).
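A minimal sketch of the tagging call, assuming Rekognition's `detect_labels` operation reading the image directly from S3. `rekognition` is expected to be a boto3 Rekognition client supplied by the caller; the function name and default thresholds are hypothetical. Note that `detect_labels` covers objects and scenes, so text in the image would need a separate call such as `detect_text`.

```python
def detect_image_tags(
    rekognition,
    bucket: str,
    key: str,
    min_confidence: float = 80.0,
    max_labels: int = 10,
) -> list[tuple[str, float]]:
    """Return (label name, confidence) pairs for an image stored in S3.

    `rekognition` is expected to behave like boto3.client("rekognition").
    Confidence is a percentage between 0 and 100.
    """
    response = rekognition.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=max_labels,
        MinConfidence=min_confidence,
    )
    return [(label["Name"], label["Confidence"]) for label in response["Labels"]]


# Usage (requires boto3 and AWS credentials; bucket and key are placeholders):
#   import boto3
#   tags = detect_image_tags(boto3.client("rekognition"), "my-bucket", "photo.jpg")
```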

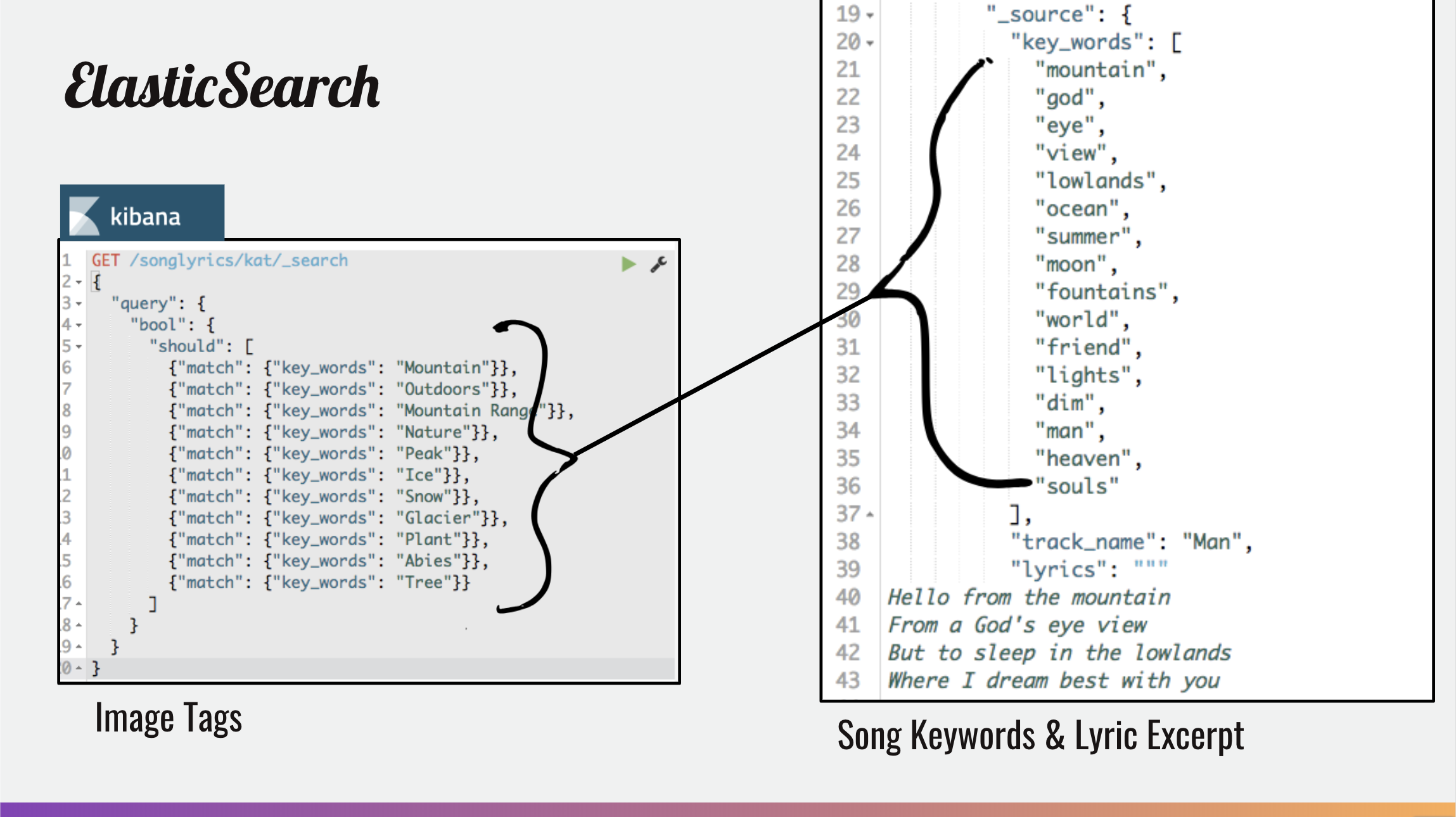

Elasticsearch is used to tie everything together. The service searches the repository of Song Lyrics/Key Words to find the closest match to the image based on its Image Tags.
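The matching step could be expressed as an Elasticsearch query along the lines below. This is only one plausible approach: it assumes the Key Words live in a hypothetical `key_words` field and the lyrics in a hypothetical `lyrics` field, and it weights each Image Tag by its confidence using the `boost` parameter of the `match` query. The source does not describe the actual query.

```python
from typing import Optional


def build_tag_query(tags: list[tuple[str, float]], size: int = 1) -> dict:
    """Build a search body that scores lyrics by how many tags they match.

    `tags` holds (tag name, confidence percentage) pairs. Each tag becomes
    an optional `match` clause, boosted by its confidence, so documents
    matching more (and more certain) tags rank higher.
    """
    should = [
        {"match": {"key_words": {"query": name, "boost": confidence / 100}}}
        for name, confidence in tags
    ]
    return {"size": size, "query": {"bool": {"should": should}}}


def best_lyrics(search_response: dict) -> Optional[str]:
    """Return the lyrics of the top-scoring hit, or None if nothing matched."""
    hits = search_response["hits"]["hits"]
    return hits[0]["_source"]["lyrics"] if hits else None
```

The body from `build_tag_query` would be sent to the index's `_search` endpoint, and `best_lyrics` reads the standard `hits.hits[]._source` structure of the response.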

Finally, an excerpt of the song lyrics is returned to the user interface, where it can be used as a caption on the user's social media posts.